Opening the Eyes of the Beholder

The Need for Cultural Responsivity in Arts Evaluation

In February 2018, the portrait of former First Lady Michelle Obama was unveiled at the National Portrait Gallery in Washington, D.C. Within this institution of power, a Greek Revival building lined with marble floors and white columns, images of presidents and other US leaders are captured in traditional oil paintings. In envisioning their own portraits, the Obamas made bold choices, which differed from most of their predecessors’ in the artists who were chosen to paint them and the styles in which they were portrayed.

Like most art, which allows for a range of interpretations, Michelle Obama’s portrait provoked great joy and much discussion, but also side-eyed questions. As people flocked to the gallery, some from far away, lining up to see the painting, and as the image was shared online, comments and questions ensued: It does not look like her. It doesn’t look like the other portraits there. Why is her skin painted in gray?

Within the arts community, even among those who revere this former First Lady, the dialogue quickly shifted to the context in which this art was created: Who is the artist? How and why was she selected? What was the artist’s intention when creating the painting? Among the topics circulating on social media were posts about the background of artist Amy Sherald, her style, influences, and choice of a color palette, and how this portrait contrasts with the institution and other paintings in its collection of First Ladies. Inquiry broadened to consider viewers’ perspectives: Why were there lines wrapped around the building, with people even planning trips to D.C., to witness this depiction? What is the range of viewers’ experiences on seeing the First Lady’s portrait?

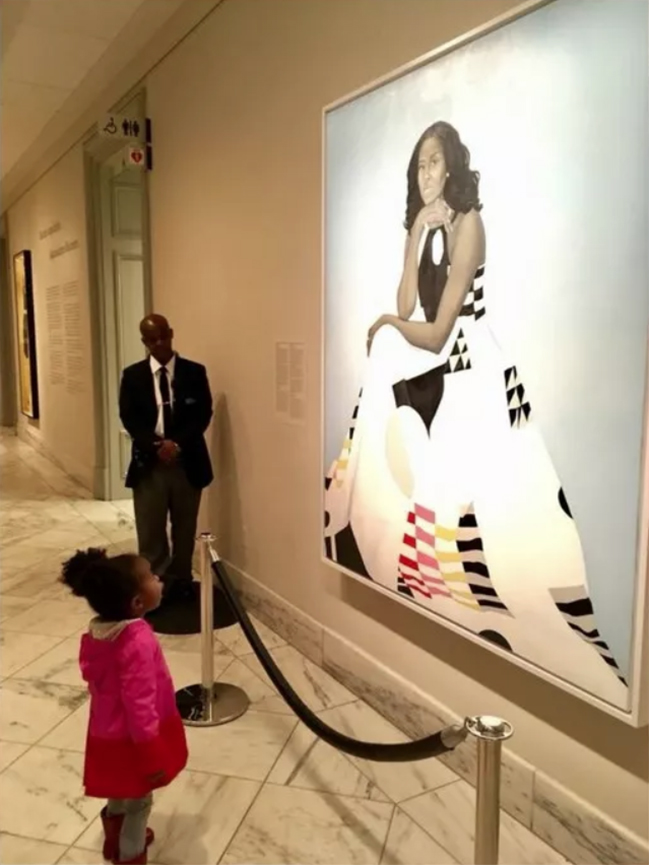

A young girl photographed by Ben Hines at the National Portrait Gallery admiring the official portrait of First Lady Michelle Obama. The photo went viral and led to a dance party with Mrs. Obama.

The views of and reactions to Michelle Obama’s portrait transcend politics. The range of experiences that viewers bring is based on their cultures, including life experiences, belief systems, customs, and opinions of what is “good” or even “appropriate” art. Some rush to express unconditional love, while others, including people who revere the First Lady, may have recalibrated their expectations.

Arts practitioners are comfortable applying a range of interpretations and contexts to paintings in galleries. But we don’t commonly exercise the same practice when designing and conducting evaluations to learn about the context and circumstances in which programs operate.

There are ways to apply this practice to evaluations, thanks largely to the work of Culturally Responsive Evaluation and Assessment (CREA), led by founding director Stafford Hood, with Rodney Hopson, Henry Frierson, and a growing circle of colleagues in the United States and internationally. These experiences with the Obama portrait, in their complexity and nuance, illustrate the issues that evaluators address in conducting Culturally Responsive Evaluations (CRE), an approach in which the evaluation’s goals, research questions, and design are informed by the broader culture(s), context(s), and system(s) in which programs operate.1 In this article, I introduce CRE, compare it to some of the common evaluation practices within the arts field, and illustrate how CRE can be applied to the arts field.

Evaluations, at their best, help us shine the light on a program, need, or issue, much like art does. But that is in theory more than practice. Instead, funders commonly inherit and use preexisting evaluation methods and standardized, often quantitative, data collection systems. For funders operating within limited capacity, timelines, and budgets, the ubiquitous online portal serves as the panacea for data collection. But in what ways is the mandate for consistent, readily available data limiting impressions and perspectives of programs, including the ways in which they operate, the struggles that organizations encounter, and the lives they touch?

To grasp the importance of culture and context to evaluation, one need only consider how, in our current evaluation systems and in the world at large, the constant barrage of requests for feedback leaves us skeptical of providing information and of how it will be used. We all grapple with survey fatigue in almost every aspect of life; one cannot book a flight or order coffee without being asked to rate the experience. We have all been asked for interviews by strangers who, if we agree to the interview, ask questions that don’t make sense or reflect our experiences; we wonder if and how our information will be used for any purpose other than improving product ratings. And we all have attended meetings when a facilitator or leader has declared the meeting environment to be “safe,” only to request information that may be uncomfortable or complex and therefore does not lend itself to group discussion. In these instances, the research shapes a story but perhaps not the most authentic one.

The Center for Culturally Responsive Evaluation and Assessment

In contrast, Culturally Responsive Evaluation requires that the studies position culture as central to the research process and take seriously the influences of cultural norms, practices, and ethnicity. Though CRE is not new, it may be to the arts field. The approach is developed and championed by CREA, whose renowned researchers strive to “generate evidence for policy making that is not only methodologically but also culturally and contextually defensible.”2 Based at the University of Illinois at Urbana-Champaign, CREA is the “current manifestation,” states Hood,3 of a decades-long movement based on emergent research on pedagogy, which argues that student learning is better assessed with culturally responsive approaches.4 Historically, its scholarship can be traced to before Brown v. Board of Education, to “a group of early ‘evaluators’ who used CRE methods to illustrate the discriminatory and racialized effects of segregation in the US South.”5 The organization promotes a “culturally responsive stance in all forms of systematic inquiry,” including evaluation, research, and analysis, that “recognizes issues of power, privilege and intersectionality.”6 Through its annual conference and other programs, CREA creates opportunities for continuing discourse, professional development, applications of its approach, and feedback to those who are dedicated to its use.

Within the collective work of the CREA community, scholars and practitioners have taken an “unequivocal stand” around a core principle: “Without the nuanced consideration of cultural context in evaluations conducted within diverse ethnic, linguistic, economic, and racial communities of color, there can be no good evaluation.”7 They call on higher education as well as funders and government to acknowledge the “inextricable” connections between culture and evaluation.8

CRE Fundamentals

Based on the work of CREA leaders, the components of Culturally Responsive Evaluation are highlighted below and applied to the arts field.9 Though these components are a standard part of good evaluations and ideally used within participatory and developmental approaches, they become culturally responsive as deeper questions explore the culture(s), context(s), and system(s) in which a program operates, which in turn influence all aspects of the evaluation’s design and uses. Applying CRE principles to evaluations can deepen arts funders’ learning about the cultures and communities that their programs are dedicated to supporting.

1. Prepare for the evaluation, and engage stakeholders.

As with other approaches, CRE begins by framing the reasons for and circumstances surrounding the evaluation. But the “important dimensions of cultural context” set the stage for the evaluation, including the formation of the evaluation team.10 Such dimensions may include the history of the organization and place/community in which it operates. Among the questions that must be asked are: “Who are viewed as stakeholders, how they are best reached, where does the evaluation stand in relation to each of them, and what are the appropriate ways to enter into conversation with each of them?”11 Most importantly, CREA urges stakeholders to ask themselves: “How is power held, exercised and either shared or used oppressively in this context? Whose values are marginalized and whose values are privileged?”12

In identifying an evaluation team, CRE emphasizes the consideration of these cultural dimensions, including the “personal and professional fit between evaluators and stakeholders.” Evaluators’ life experience, language, and technical expertise should match the needs of the community in which the program happens. A crucial dimension is whether “cultural insiders,” or gatekeepers, will be needed to provide access to program participants. At this early phase in building relationships and trust between evaluators and stakeholders, evaluators must spend time in community with stakeholders, learn about the culture(s) in which the evaluation is happening, and set the right pace for building relationships.13 Katrina Bledsoe, a CREA advisor, believes in “letting stakeholders tell me how they want to interact.”14 Hazel Symonette cautions evaluators to first assess their sense of self, which in turn influences how they relate to communities:

We need to understand who we are as evaluators and how we know what we believe we know about others and ourselves…. [so that the help we offer] moves beyond deficit-grounded presumptions of intrinsic brokenness and weakness…

…evaluator roles typically confer social powers to define reality and make impactful judgements about others. These judgement-making responsibilities place us among society’s privileged authorities.15

Power Dynamics and Selection of Evaluators. Arts funders are generally cognizant of the power dynamic that exists between their institutions and the organizations and communities they support. But CRE charges them to more actively incorporate that dynamic into evaluation design and relationship building with grantees. If funding staff are not known within a community, then among the first steps are visits by staff, probably informal and repeated. CRE involves, according to its leaders, “listening carefully, stepping cautiously, and gathering information on which relationships can be built.”16 Whereas a traditional evaluation might prioritize the assumed “objectivity” of an “outside” evaluator, CRE evaluators know that the more removed the evaluator is from a program, the more limited is their ability to understand its context and present a valid and credible picture of it.

Context around Place. A scenario common during gentrification illustrates the importance of context in collaboration among arts organizations. Suppose that several funders have supported arts organizations located in a part of town that is gentrifying. Local residents who patronized and supported the organizations, both in-kind and financially, are being priced out of their longtime homes, as subsidized housing is replaced by gated condominiums and high-priced rentals. A YMCA that offered arts classes is replaced by a high-end grocer, and a church that offered evening performances is replaced by a dog park. Though never financially stable, arts organizations are now struggling to survive, and funders want to know what would (or would not) be helpful. In a planning study I conducted with a similar community and context, stakeholders, including teachers, ministers, and nonprofit heads, repeatedly explained how longtime residents’ subsidized apartment buildings had been intentionally condemned, so that developers could conveniently entice them with relocation “vouchers” to move to distant suburbs, leaving residents with no understanding that they would now be responsible for paying high utility bills. This community displacement raised understandable skepticism about the motives of new arts organizations that wanted to be located there and any “help” that was offered.

Context around Gender. Recent dialogues with transgender and gender-nonconforming artists reinforce the importance of never making assumptions about one’s knowledge of a community or its context. Individual conversations, as well as trainings and discussions at national conferences, served as a wake-up call and deepened my understanding. Here are a few examples. Through Dance/USA, from choreographer Sean Dorsey, I learned that transgender and gender-nonconforming dance artists typically do not set foot in dance studios due to their understandable concerns about having no place to change clothes, sensitivities about touch, as can happen in improvisation or partnering, or being expected to wear costumes that do not fit their gender identity. Through the Association of Performing Arts Presenters, I heard artist T. Carlis Roberts speak of how travel for transgender and gender-nonconforming people can be dangerous, as they encounter hysteria by TSA agents during security pat-downs and are refused hotel rooms if their legal names do not match the stereotypes for their physical appearance.

2. Identify evaluation purposes, and frame the right questions.

Evaluations are commonly conducted as a condition of funding or to gain knowledge about a program’s planning, including its expansion or restructuring. Sometimes evaluations are conducted amid political controversy, including changes in funding or gentrification. In what is seen as the most important procedural step, evaluators must “set the parameters of what will be examined” which, by default, determines what will, and therefore will not, be examined.17

In framing questions, stakeholders ask themselves whose values and interests their questions represent: those of themselves, funders, or culture(s) being studied. They must decide “what will be accepted as credible evidence” in data collection.18 Among factors to be considered are whether questions “address issues of equity and opportunity,” as well as “explore who benefits most and least” from a program. Finally, questions must be broad enough to permit multiple ways of responding.19

Purpose. Within the arts (and other) fields, when evaluations are conducted under the guise of “effectiveness” or “impact of investment,” these words can seem like euphemisms for renewing or discontinuing funding, particularly if such ambiguous terms are not defined. During evaluations, a common concern expressed by community members is whether new partners are committed to solving community problems over the long haul or are merely offering some short-term funding. This concern plays out in another euphemism, in the form of a telltale question that residents ask: “How long are you here for?” implying that such “help” may be available only for as long as a grant lasts.

Questions. A common scenario when organizations offer ticket subsidies illustrates the importance of questions. When provided to an organization based within a community it intends to serve, such subsidies may be valuable. But as a funder, I have encountered times when, if subsidized tickets do not sell, grantees simply state in final reports that the intended audiences “did not come,” with no apparent understanding of why not. A culturally responsive evaluation would question and examine the barriers to attendance and their effects on ticket access. Such barriers to participation may include transportation (for example, in my town getting from the suburbs to one of the large venues can take two buses and two trains), lack of child care (including funds to pay for it), and not knowing how to get to the facility and where to park (if one has a car and is willing to drive). But other barriers arise when people anticipate feeling uncomfortable or unwelcome at the venue, particularly if they are new to it, or, as I learned from a recent grantee, when people think that they do not have suitable clothing to wear to an arts event.

3. Design the evaluation, select instruments, and collect data.

Design addresses what information is needed, where it exists, and how it is best gathered. As with other forms of evaluation, data collection methods may vary but should be “consistent with the values of the community.”20 Using multiple methods to gather information may form a more nuanced picture of the program, the community in which it exists, or the issue to be addressed. In selecting methods, care should be taken to select a range that will provide a more complete picture of a community than is possible with, for example, a single survey. Often what is critical to the validity of the evaluation are meaningful conversations with leaders or program beneficiaries.

Instruments must be reviewed and validated by the audience with whom they will be used, and must take into account factors such as language and literacy levels, comfort with oral versus written communication, and whether nonverbal communication will be included. Bledsoe describes the movement, of late, to place community members at the forefront of instrument development and data collection.21

As CREA posits, “Data collection under CRE emphasizes relationship. The cultural locations of members of the evaluation team shape what they are able to hear, observe, or experience.”22 Data collectors must understand the cultural context of the study, as well as any technical procedures; “shared lived experience is often a solid grounding” for collecting data. Collectors make clear whether providing information is voluntary and whether it will be safeguarded, allow adequate time for introductions, and proceed at a pace that is acceptable to the community.

Data Collection. We conducted an evaluation of a community-based arts organization that launched a program to teach media skills to teens, many of whom lived in unstable home environments and were largely responsible for their livelihood and transportation. Rather than center on program impact in a general way, the study and instruments were designed to better understand the life circumstances of the teens and the role played by the program through their own perspective. Over pizza, as teens described their daily routines and challenges, they stressed the role of the arts organization as central in their lives not only through its training, but through connections to internships and relationships built with potential employers. Moreover, they explained how the program served as a nexus for friendships to develop, providing teens with both a place to go and a sense of family. Staff provided context for student feedback, as they were familiar with the lives of every student in the program and knew family members and circumstances.

4. Analyze data, disseminate findings, and use results.

Again, in analysis, cultural context influences the meanings and any conclusions drawn from the data, ultimately increasing its validity. CRE encourages examination of outliers and unexpected results, as well as variances in how the program is received by subgroups of participants. CRE advises that cultural interpreters and stakeholders may be needed to capture nuances and mine the multiple meanings that may be present in the data. Finally, the evaluation team should “notice how the evaluator lens (values, experience, expectations) shapes the conclusions.”23 Symonette describes this lens, using the metaphor of a “double-sided mirror,” as encouraging evaluators to address what “self-assessment reveals about their capacity to design and provide evaluation services that leave primary stakeholders better off.”24 In disseminating information, evaluators guard information that should not be shared, and remain aware of who benefits, or may be harmed, from sharing results. To accurately interpret data, cultural context must be understood and incorporated.

Data Interpretation. In an evaluation conducted for another arts training program, a range of data was collected from all teaching artists and groups of children who were at different levels in their training. In discussions with the youngest, mostly African American children, one of the facilitators (an African American woman) received different responses to questions than did I (a white woman), when a few children described the organization as racist. Despite our urging, an additional, comparative focus group did not happen. In interviews, teaching artists understood and provided context for children’s comments, explaining how perceptions of class differences played out within the organization. It seemed that students were judged depending on their level of training, where they lived, and how they traveled to training. Even then, it created tension to use the word racist within an evaluation, and without additional research our findings were inconclusive.

Data Form and Use. In an evaluation of a different, after-school arts program, data were triangulated from the perspectives of children, teaching artists, staff, and the parents of children who studied there. Data collection took into account the circumstances needed to ensure participation from parents, which meant holding focus groups in the evening to accommodate participants’ work schedules, addressing the need for Spanish translation, and providing a meal. Over lasagna, as family members translated, mothers and aunts shared stories and emotions about kids’ progress. The sense of love for the program, facility, and staff was palpable in the room. Yet despite the preponderance of evidence, the organization’s leadership still longed for a simple number that would “prove” and summarize the impact of the program on students in a form that could be shared with policymakers and fit in an infographic.

In Closing: Portraits

On Memorial Day, I visited the National Portrait Gallery to view the Obama portraits. In the morning, as I climbed the steps to the building, I heard a voice, quietly proclaiming, “This is fun.” I looked up and into the eyes of an older woman, standing at the top of the steps with her two adult daughters. Beaming, she asked me a question: “Is it usually like this?” I replied, “What do you mean by this?” She gazed out and waved her hand toward the sides of the building at the growing lines of elders, families, and babies of various sizes and skin colors. I responded that in the over thirty years I had lived in D.C., I recalled no such lines for any presidential portrait. (I needed the context for her question, and she needed the context for my response.)

As the doors opened and security guards waved us in and clicked crowd counters (quantitative attendance figures), two things happened within the many lines. First, people at once rushed and slowed their pace, eager to get to the portraits but seemingly determined not to break in front of others. Second, though we were in a gallery, it was loud, with constant chatter and the sounds of children. As we reached the rooms where the Obama paintings hung, a behavior pattern spontaneously formed: as visitors stepped up to the painting, they were allowed quiet time with the work; then, as they turned around, the next visitor in line offered to take photos of them in front of the portrait. Then, these visitors — strangers to each other — stepped aside, huddled and talked, asking each other what they thought of the painting, and where they were from. Smiles were exchanged, and eyes watered, as babies crawled around the floor. The woman I met at the door appeared again and asked if the gallery is usually “like this,” pointing to the velvet ropes that cordoned off hundreds of people as they waited. I replied again, not usually, and then we shared our stories. She had raised her two daughters in Hawaii, where they attended the same school as President Obama; one went on to win a Tony award, and the other had just retired from the corporate world in the Twin Cities. She spoke of the value of good schooling. We reflected on the beauty of the people in the paintings, the likenesses captured by the artists who portrayed them, and the difficulty of living in current times.

That day was about more than viewing paintings. It was an event, a party among strangers, that broke some of the “rules” in galleries about how we interact with art and with each other. Certainly, it was about the context for these presidential paintings and the times in which we live. The gallery became a space for people from different places and cultures to tell their story of how and why they were there, how they felt, and what they thought.

Culturally Responsive Evaluation urges us to do the same thing. As we behold programs, it requires us to consider context and culture — to open our eyes and sharpen our senses. As we relate to community members and hear their stories, we compose fuller portraits that humanize our programs. It is a deep, lifelong process.

Suzanne Callahan founded Callahan Consulting for the Arts in 1996 to help funders and arts organizations realize their vision. She has administered national funding programs for over twenty-five years. Her book Singing Our Praises: Case Studies in the Art of Evaluation was awarded Outstanding Publication from the American Evaluation Association for its contribution to the theory and practice in evaluation.

Acknowledgments go to Katrina Bledsoe, for contributing to this article; to Stafford Hood, for his interview; as well as to other contributors to CREA.

NOTES

1. Henry T. Frierson, Stafford Hood, Gerunda B. Hughes, and Veronica G. Thomas, “A Guide to Conducting Culturally Responsive Evaluations,” in The 2010 User-Friendly Handbook for Project Evaluation, ed. Joy Frechtling, 75–96 (Arlington, VA: National Science Foundation, 2010).

2. Center for Culturally Responsive Evaluation and Assessment (CREA), Mission Statement, https://crea.education.illinois.edu/home/about/mission, accessed June 25, 2018. CREA was founded by Dr. Stafford Hood and is based at the University of Illinois at Urbana-Champaign.

3. Stafford Hood, telephone interview by author, June 29, 2018.

4. CREA cites two interrelated streams of educational research: that of Robert Stake, from 1973, who articulated the concept “Responsive Evaluation” to emphasize stakeholders, and that of Gloria Ladson-Billings, in the 1990s, who coined “Culturally Relevant Pedagogy,” and who, with others, argued for its use to improve the achievement of students from ethnically diverse backgrounds. For more information, see “Contemporary Developments in Culturally Responsive Evaluation: 1998 to the Present,” Origins, CREA, https://crea.education.illinois.edu/home/origins.

5. “Contemporary Developments in Culturally Responsive Evaluation: 1998 to the Present,” Origins, CREA, https://crea.education.illinois.edu/home/origins, accessed June 25, 2018.

6. CREA, Mission Statement, https://crea.education.illinois.edu/home/about/mission. See also “American Evaluation Association Statement on Cultural Competence in Evaluation” (2011), which was developed by CREA members, https://www.eval.org/ccstatement, accessed June 25, 2018.

7. Stafford Hood, Rodney Hopson, and Henry Frierson, eds., Continuing the Journey to Reposition Culture and Cultural Context in Evaluation Theory and Practice (Charlotte, NC: Information Age Publishing, 2015), ix.

8. Ibid., xiii.

9. Based on the work of Stafford Hood, Henry Frierson, Rodney Hopson, and other members of CREA, these components are adapted from the talk “Foundations of Culturally Responsive Evaluation,” given by Rodney K. Hopson and Karen E. Kirkhart at the Center for Culturally Responsive Evaluation and Assessment, Chicago, September 26, 2017.

10. Ibid., 3.

11. Ibid.

12. Ibid.

13. Ibid., 5.

14. Katrina Bledsoe, telephone interview with author, June 27, 2018.

15. Hazel Symonette, “Culturally Responsive Evaluation as a Resource for Helpful-Help,” in Continuing the Journey to Reposition Culture and Cultural Context in Evaluation Theory and Practice, ed. Hood, Hopson, and Frierson, 110.

16. Hopson and Kirkhart, “Foundations of Culturally Responsive Evaluation,” 1.

17. Ibid., 7.

18. Ibid.

19. Ibid.

20. Ibid., 8.

21. Bledsoe, interview.

22. Hopson and Kirkhart, “Foundations of Culturally Responsive Evaluation,” 11.

23. Ibid., 12.

24. Symonette, “Culturally Responsive Evaluation as a Resource for Helpful-Help,” 111–12.